Speech synthesis, the artificial production of human speech, has been revolutionized by deep learning techniques. This field, also known as text-to-speech (TTS), has broad applications ranging from voice assistants and navigation systems to accessibility tools for the visually impaired. This guide explores the landscape of speech synthesis using deep learning models as of 2019, covering key research and architectural advancements.

Before diving into deep learning approaches, it’s important to acknowledge the traditional methods of speech synthesis: concatenative and parametric. Concatenative synthesis pieces together segments of recorded speech from a large database. While straightforward, it requires extensive databases and struggles to adapt to diverse speaking styles. Parametric synthesis, on the other hand, uses a model to generate speech based on parameters extracted from recorded voices. This approach offers more flexibility, but often compromises on naturalness.

Deep learning offers a more powerful and flexible alternative to these traditional techniques. Let’s examine some notable research and models that emerged as leaders in the field:

WaveNet: A Generative Model for Raw Audio

Developed by DeepMind, Google’s AI research subsidiary, WaveNet is a generative model that produces raw audio waveforms directly. When it was introduced in 2016, this probabilistic, autoregressive model achieved state-of-the-art text-to-speech results for both US English and Mandarin.

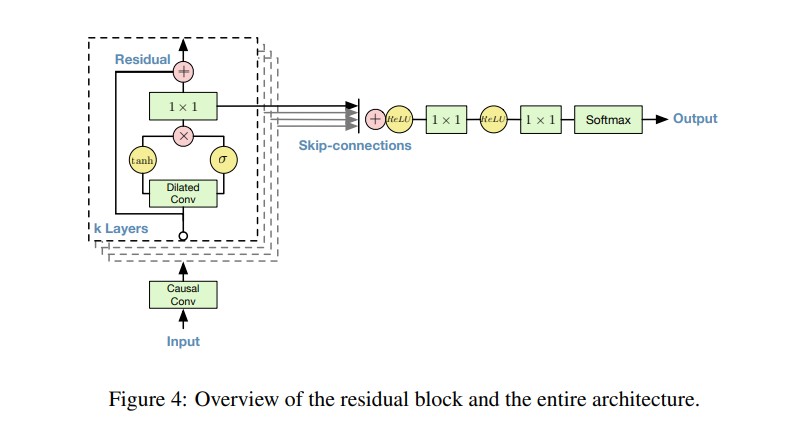

WaveNet builds upon the architecture of PixelCNN, adapting it for audio generation. The core idea is that each audio sample is conditioned on the preceding samples, allowing the model to learn the dependencies inherent in speech. This conditional probability is modeled using a stack of convolutional layers without pooling layers, ensuring the output maintains the same time dimensionality as the input.

The use of causal convolutions is crucial in WaveNet. Causal convolutions guarantee that the model only uses past information to predict future samples, preserving the natural order of speech. While causal convolutions can be computationally expensive due to the need for many layers to achieve a large receptive field, WaveNet overcomes this challenge with dilated convolutions. Dilated convolutions efficiently increase the receptive field, enabling the model to capture long-range dependencies with fewer layers. The model uses a softmax distribution to model the conditional distributions over individual audio samples.
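The effect of dilation on the receptive field can be checked with a few lines of code. The following is a minimal sketch, not WaveNet's actual implementation: the function names, the kernel size of 2, and the doubling dilation schedule are illustrative assumptions.

```python
def causal_dilated_conv(x, weights, dilation):
    """1-D causal convolution: output[t] depends only on x[t], x[t-d], x[t-2d], ..."""
    k = len(weights)
    out = []
    for t in range(len(x)):
        total = 0.0
        for i, w in enumerate(weights):
            idx = t - (k - 1 - i) * dilation  # reach back in time, never forward
            if idx >= 0:  # positions before the start behave like zero padding
                total += w * x[idx]
        out.append(total)
    return out


def receptive_field(kernel_size, dilations):
    """Number of input samples that can influence one output of the stack."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# Assumed schedule: ten layers with dilations 1, 2, 4, ..., 512.
doubling = [2 ** i for i in range(10)]
print(receptive_field(2, doubling))  # 1024
print(receptive_field(2, [1] * 10))  # 11 (same depth, no dilation)
```

With the same number of layers, doubling the dilation at each layer widens the receptive field from 11 samples to 1024, which is why far fewer layers are needed to cover long stretches of audio.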

WaveNet’s performance is typically evaluated using the Mean Opinion Score (MOS), a subjective measure of voice quality ranging from 1 to 5, with 5 being the highest quality.
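As a rough illustration of how a MOS is computed, the sketch below averages listener ratings and attaches a 95% confidence interval. The ratings are made-up example values, and the normal approximation for the interval is an assumption; individual papers may use a different procedure.

```python
from math import sqrt
from statistics import mean, stdev


def mean_opinion_score(ratings):
    """Return (MOS, half-width of an approximate 95% confidence interval)."""
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("MOS ratings must lie between 1 and 5")
    mos = mean(ratings)
    # Normal approximation; assumes independent ratings.
    half_width = 1.96 * stdev(ratings) / sqrt(len(ratings))
    return mos, half_width


# Hypothetical ratings from eight listeners for one synthesized utterance.
ratings = [4, 5, 4, 3, 5, 4, 4, 5]
mos, ci = mean_opinion_score(ratings)
print(f"MOS = {mos:.2f} +/- {ci:.2f}")
```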

Tacotron: Towards End-to-End Speech Synthesis

Also from Google, Tacotron marked a significant step towards end-to-end speech synthesis. The model synthesizes speech directly from characters and is trained on paired text and audio. In the original 2017 paper, it achieved a MOS of 3.82 on US English. Tacotron generates speech at the frame level, making it faster than sample-level autoregressive methods like WaveNet.

A key advantage of Tacotron is that it can be trained from scratch on text and audio pairs without phoneme-level alignment, which makes it easy to adapt to new datasets. The model employs a sequence-to-sequence (seq2seq) architecture consisting of an encoder, an attention-based decoder, and a post-processing network.

The encoder converts input characters into a sequence of representations. Each character is transformed into a one-hot vector and then embedded into a continuous vector. Non-linear transformations and dropout layers are added to prevent overfitting, which can improve pronunciation accuracy.
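The first encoder step can be sketched as follows. Multiplying a one-hot vector by an embedding matrix simply selects one row of that matrix, which is why frameworks implement it as a table lookup. The vocabulary, embedding size, and random initialization here are illustrative assumptions, not Tacotron's actual settings.

```python
import random

VOCAB = "abcdefghijklmnopqrstuvwxyz '"  # hypothetical character set
EMBED_DIM = 4                           # hypothetical embedding size

random.seed(0)
# One trainable row per character; random values stand in for learned ones.
embedding = [[random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in VOCAB]


def one_hot(ch):
    """Vector of zeros with a single 1 at the character's index."""
    vec = [0.0] * len(VOCAB)
    vec[VOCAB.index(ch)] = 1.0
    return vec


def embed(ch):
    """one_hot(ch) times the embedding matrix, i.e. a row lookup."""
    oh = one_hot(ch)
    return [
        sum(oh[i] * embedding[i][j] for i in range(len(VOCAB)))
        for j in range(EMBED_DIM)
    ]


encoded = [embed(ch) for ch in "hi there"]
print(len(encoded), len(encoded[0]))  # 8 4
```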

A tanh content-based attention decoder generates spectrogram frames, which are then converted into waveforms using the Griffin-Lim algorithm. The CBHG module, which combines a bank of 1-D convolutions, a highway network, and a bidirectional GRU, is used in both the encoder and the post-processing network to extract representations from sequences.
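To make the attention step concrete, here is a minimal sketch of tanh (additive) content-based attention. Each encoder state is scored against the current decoder query, the scores are normalized with a softmax, and the context vector is the weighted sum of encoder states. The parameter names `W_q`, `W_h`, and `v` and the toy values are assumptions for illustration; in a real model they are learned.

```python
import math


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def tanh_attention(query, encoder_states, W_q, W_h, v):
    """score_i = v . tanh(W_q query + W_h h_i), then softmax over i."""
    q_proj = matvec(W_q, query)
    scores = []
    for h in encoder_states:
        h_proj = matvec(W_h, h)
        hidden = [math.tanh(a + b) for a, b in zip(q_proj, h_proj)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * h[j] for w, h in zip(weights, encoder_states))
        for j in range(dim)
    ]
    return weights, context


# Toy example with identity projections (hypothetical values).
identity = [[1.0, 0.0], [0.0, 1.0]]
states = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
weights, context = tanh_attention([1.0, 0.0], states, identity, identity, [1.0, 1.0])
print([round(w, 3) for w in weights])
```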

Deep Voice 1: Real-time Neural Text-to-Speech

Baidu’s Silicon Valley Artificial Intelligence Lab introduced Deep Voice, a neural text-to-speech system composed of five core components:

- A segmentation model for phoneme boundary detection using deep neural networks and connectionist temporal classification (CTC) loss.

- A grapheme-to-phoneme conversion model.

- A phoneme duration prediction model.

- A fundamental frequency prediction model.

- An audio synthesis model based on a streamlined WaveNet variant.

This model uses separate components to handle different aspects of speech synthesis. The grapheme-to-phoneme model converts text into phonemes, while the segmentation model identifies the boundaries of each phoneme in the audio. The duration and frequency models predict the length and pitch of each phoneme, respectively. Finally, the audio synthesis model combines these elements to generate the final audio waveform.

Deep Voice 2: Multi-Speaker Neural Text-to-Speech

Deep Voice 2, also from Baidu, built upon the foundation of Deep Voice 1 by introducing a method for generating diverse voices from a single model using low-dimensional, trainable speaker embeddings. This significantly improved audio quality and enabled the model to learn hundreds of unique voices with relatively little data (less than half an hour per speaker).

While the overall pipeline resembles Deep Voice 1, a key change was the separation of the phoneme duration and frequency models. Instead of predicting both jointly (as in Deep Voice 1), Deep Voice 2 first predicts phoneme durations, which are then used as inputs to the frequency model. In the same work, the authors also improved Tacotron by replacing its Griffin-Lim stage with a WaveNet-based spectrogram-to-audio neural vocoder.

The segmentation model in Deep Voice 2 employs a convolutional-recurrent architecture with CTC loss for classifying phoneme pairs. The authors also incorporated batch normalization and residual connections in the convolutional layers.

The speaker-specific voice characteristics are captured by augmenting each model with a low-dimensional speaker embedding vector. Weight sharing across speakers is achieved by storing speaker-dependent parameters in this low-dimensional vector. Speaker embeddings are integrated into multiple parts of the model to ensure the unique voice signature of each speaker is captured.
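The idea of sharing most weights while keeping a small per-speaker vector can be sketched as below. This is a simplified illustration under stated assumptions, not the Deep Voice 2 implementation: the layer, the dimensions, and the names `speaker_table`, `site_projection`, and `speaker_conditioned_layer` are hypothetical, and random values stand in for trained parameters.

```python
import math
import random

random.seed(0)
NUM_SPEAKERS, EMBED_DIM, HIDDEN_DIM = 3, 2, 4  # hypothetical sizes


def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


speaker_table = rand_matrix(NUM_SPEAKERS, EMBED_DIM)   # one small vector per speaker
shared_weights = rand_matrix(HIDDEN_DIM, HIDDEN_DIM)   # identical for all speakers
site_projection = rand_matrix(HIDDEN_DIM, EMBED_DIM)   # maps embedding to layer size


def speaker_conditioned_layer(x, speaker_id):
    """Shared transform plus a bias derived from the speaker embedding."""
    shared = matvec(shared_weights, x)
    projected = matvec(site_projection, speaker_table[speaker_id])
    bias = [math.tanh(p) for p in projected]
    return [math.tanh(s + b) for s, b in zip(shared, bias)]


x = [0.5, -0.2, 0.1, 0.3]
out_a = speaker_conditioned_layer(x, speaker_id=0)
out_b = speaker_conditioned_layer(x, speaker_id=1)
print(out_a != out_b)  # same input and shared weights, different speaker
```

Only the rows of `speaker_table` differ between speakers in this sketch, so adding a voice adds `EMBED_DIM` parameters rather than a whole new network.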

Deep Voice 3: Scaling Text-to-speech With Convolutional Sequence Learning

The third iteration, Deep Voice 3, showcased a fully-convolutional, attention-based neural text-to-speech (TTS) system designed for parallel computation and scalability.

This architecture uses a fully-convolutional character-to-spectrogram approach, allowing for efficient parallel processing. The model, which the authors trained on datasets including LibriSpeech ASR, is an attention-based sequence-to-sequence model. It converts textual features (characters, phonemes, stresses) into vocoder parameters such as mel-band spectrograms, linear-scale log magnitude spectrograms, fundamental frequency, spectral envelope, and aperiodicity parameters. These parameters are then fed into the audio waveform synthesis model.

The architecture consists of an encoder, a decoder, and a converter:

- Encoder: A fully-convolutional encoder that transforms textual features into a learned internal representation.

- Decoder: A fully-convolutional causal decoder that autoregressively decodes the learned representations.

- Converter: A fully-convolutional post-processing network that predicts the final vocoder parameters.

Deep Voice 3 uses a simple text pre-processing approach: uppercasing all input characters, removing intermediate punctuation, ending each utterance with a period or question mark, and replacing the spaces between words with special separator characters that indicate pause duration.
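The pre-processing steps above can be sketched in a few lines. This is a simplified illustration: the single `%` separator is a hypothetical stand-in for the pause-length characters, and the function name is an assumption.

```python
import string

SEPARATOR = "%"  # hypothetical stand-in for a pause-length separator


def preprocess(text):
    """Uppercase, drop punctuation, normalize the ending, mark word gaps."""
    text = text.strip().upper()
    final_mark = "?" if text.endswith("?") else "."
    # Remove every punctuation mark; the final one is re-added below.
    kept = "".join(ch for ch in text if ch not in string.punctuation)
    words = kept.split()
    return SEPARATOR.join(words) + final_mark


print(preprocess("Hello, world"))    # HELLO%WORLD.
print(preprocess("Are you there?"))  # ARE%YOU%THERE?
```

Note that this sketch also strips apostrophes, so "don't" becomes "DONT"; a production pipeline would likely treat such cases separately.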

Parallel WaveNet: Fast High-Fidelity Speech Synthesis

Google’s Parallel WaveNet tackled the computational bottleneck of the original WaveNet by introducing a method called Probability Density Distillation. This technique trains a parallel feed-forward network, referred to as the “student,” from a pre-trained WaveNet “teacher.” This approach combines the training efficiency of WaveNet with the efficient sampling of Inverse Autoregressive Flows (IAFs).

The student network learns to match the probability of its own samples under the distribution learned by the teacher.
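In the Parallel WaveNet paper, this objective is written as a KL divergence between the student distribution $P_S$ and the teacher distribution $P_T$, which splits into two terms:

```latex
D_{\mathrm{KL}}\left(P_S \,\|\, P_T\right) = H(P_S, P_T) - H(P_S)
```

Here $H(P_S, P_T)$ is the cross-entropy between student and teacher, and $H(P_S)$ is the entropy of the student. Minimizing the cross-entropy pushes the student towards samples the teacher finds likely, while the entropy term keeps the student from collapsing onto the teacher's single most likely output.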

Parallel WaveNet also incorporates additional loss functions to guide the student in generating high-quality audio:

- Power Loss: Ensures that the power in different frequency bands of the generated speech is, on average, used as much as in human speech.

- Perceptual Loss: Explores feature reconstruction loss (Euclidean distance between feature maps) and style loss (Euclidean distance between Gram matrices). Style loss was found to produce better results.

- Contrastive Loss: Penalizes waveforms with high likelihood regardless of the conditioning vector.
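The difference between the two perceptual loss variants can be illustrated with a small sketch. Feature maps are represented here as a list of channels, each a list of activations over time; the toy values and function names are assumptions, and squared Euclidean distance is used for simplicity.

```python
def gram_matrix(features):
    """Channel-by-channel correlations, averaged over time steps."""
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
        for ci in features
    ]


def squared_distance(a, b):
    return sum(
        (x - y) ** 2
        for row_a, row_b in zip(a, b)
        for x, y in zip(row_a, row_b)
    )


def feature_loss(generated, reference):
    """Distance between the feature maps themselves."""
    return squared_distance(generated, reference)


def style_loss(generated, reference):
    """Distance between the Gram matrices of the feature maps."""
    return squared_distance(gram_matrix(generated), gram_matrix(reference))


# Toy feature maps: the generated one is the reference shifted by one step.
reference = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
generated = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]]
print(feature_loss(generated, reference))  # 8.0
print(style_loss(generated, reference))    # 0.0
```

In this toy case the feature reconstruction loss penalizes a pure time shift heavily, while the style loss ignores it because the Gram matrix discards timing and keeps only how channels co-activate.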

Neural Voice Cloning with a Few Samples

Baidu Research presented a neural voice cloning system capable of synthesizing a person’s voice from just a few audio samples.

The system utilizes two main approaches: speaker adaptation (fine-tuning a multi-speaker generative model) and speaker encoding (training a separate model to infer a new speaker embedding for application to the multi-speaker generative model). The researchers used Deep Voice 3 as the baseline for their multi-speaker model.

The process involves extracting speaker characteristics from audio samples and generating audio that matches the speaker’s voice, given the available text. Speech naturalness and speaker similarity are key performance metrics. The researchers proposed a speaker encoding method that directly estimates a speaker’s embeddings from audio samples of an unseen speaker.

VoiceLoop: Voice Fitting and Synthesis via A Phonological Loop

Facebook AI Research introduced VoiceLoop, a neural text-to-speech (TTS) technique that transforms text into speech using voices sampled from real-world audio.

VoiceLoop draws inspiration from the phonological loop, a working memory model that holds verbal information briefly. The phonological loop consists of a constantly refreshed phonological store and a rehearsal process that maintains longer-term representations.

VoiceLoop constructs a phonological store by implementing a shifting buffer as a matrix. Sentences are represented as phoneme lists, with each phoneme encoded as a short vector. The current context vector is generated by weighting and summing the encodings of the phonemes at each time point.
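Both mechanisms can be sketched briefly. The buffer below is a fixed-length queue of column vectors in which each new vector pushes the oldest one out, and the context vector is a weighted sum of phoneme encodings. The sizes, values, and function names are illustrative assumptions rather than VoiceLoop's actual code.

```python
from collections import deque


def make_buffer(dim, length):
    """Fixed-length buffer of `length` column vectors, initially all zeros."""
    return deque([[0.0] * dim for _ in range(length)], maxlen=length)


def push(buffer, new_vector):
    """Insert at the front; maxlen discards the oldest column at the back."""
    buffer.appendleft(new_vector)


def context_vector(phoneme_encodings, weights):
    """Weighted sum of the phoneme encodings (weights assumed to sum to 1)."""
    dim = len(phoneme_encodings[0])
    return [
        sum(w * enc[j] for w, enc in zip(weights, phoneme_encodings))
        for j in range(dim)
    ]


buffer = make_buffer(dim=2, length=3)
push(buffer, [1.0, 1.0])
push(buffer, [2.0, 2.0])
print(list(buffer))  # [[2.0, 2.0], [1.0, 1.0], [0.0, 0.0]]

encodings = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical phoneme encodings
print(context_vector(encodings, [0.25, 0.75]))  # [0.25, 0.75]
```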

VoiceLoop distinguishes itself through its use of a memory buffer instead of conventional RNNs, memory sharing between all processes, and shallow, fully-connected networks for all computations.

Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions

Researchers from Google and the University of California, Berkeley, introduced Tacotron 2, a neural network architecture for speech synthesis from text.

Tacotron 2 combines the strengths of Tacotron and WaveNet. The architecture comprises a recurrent sequence-to-sequence feature prediction network that maps character embeddings to mel-scale spectrograms, followed by a modified WaveNet model acting as a vocoder to synthesize time-domain waveforms from the spectrograms. The model achieved a MOS of 4.53.
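For readers unfamiliar with the mel scale, the sketch below shows one common conversion between hertz and mels and how band edges spaced evenly in mels become wider at higher frequencies. The formula is a widely used convention and an assumption here, since the text above does not specify which variant Tacotron 2 uses; the band count and frequency range are also hypothetical.

```python
import math


def hz_to_mel(hz):
    """One common mel-scale convention (assumed, not taken from the paper)."""
    return 2595.0 * math.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(num_bands, f_min, f_max):
    """Edges equally spaced on the mel scale, returned in hertz."""
    low, high = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (high - low) / (num_bands + 1)
    return [mel_to_hz(low + i * step) for i in range(num_bands + 2)]


# Hypothetical settings: 8 bands between 0 Hz and 8 kHz.
edges = mel_band_edges(num_bands=8, f_min=0.0, f_max=8000.0)
widths = [b - a for a, b in zip(edges, edges[1:])]
print([round(w) for w in widths])  # widths grow with frequency
```

The narrow low-frequency bands give the spectrogram more resolution where speech carries the most perceptually relevant detail.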

Conclusion

By 2019, deep learning had fundamentally reshaped the field of speech synthesis. Models like WaveNet, Tacotron, and the Deep Voice series demonstrated the potential for generating highly realistic and versatile speech. Techniques like parallel processing and voice cloning further expanded the capabilities and applications of these models. This overview provides a snapshot of the leading approaches to speech synthesis with deep learning as of that year, laying the groundwork for future advancements in the field.