Natural Language Processing (NLP) is a rapidly evolving field within Artificial Intelligence (AI), driving innovations like sophisticated chatbots, automated content creation, and advanced text analysis. Recent advancements have significantly enhanced computers’ ability to process and generate human-like text, programming languages, and even biological sequences. A core component of NLP is Natural Language Generation (NLG), which empowers machines to produce meaningful and contextually relevant text. This guide provides a comprehensive overview of NLG, its applications, and the techniques used to create human-quality text.

What is Natural Language Generation (NLG)?

Natural Language Generation (NLG) is a subfield of Natural Language Processing (NLP) that focuses on automatically generating natural language text from structured data or other non-linguistic information. It is often described as the inverse of Natural Language Understanding (NLU): NLU maps human language to a machine-readable representation, while NLG maps a representation back to human language. NLG enables computers to communicate ideas, present data, and create content in a form that people can read easily. The goal of NLG is to produce text that is coherent, grammatically correct, and contextually appropriate.

Why is Natural Language Generation (NLG) Important?

NLG is becoming increasingly crucial across various industries. It automates content creation, personalizes customer communication, and enhances decision-making processes. Here are some key reasons why NLG is important:

- Automation: NLG automates the creation of reports, articles, product descriptions, and other text-based content, saving time and resources.

- Personalization: NLG can tailor messages and content to individual users, enhancing customer engagement and satisfaction.

- Data Interpretation: NLG translates complex data into easy-to-understand narratives, aiding in business intelligence and decision support.

- Accessibility: NLG provides alternative communication methods for individuals with disabilities, such as generating text descriptions of visual content that a screen reader can then read aloud.

Applications of Natural Language Generation (NLG)

NLG has a wide range of applications across various industries. Some of the most prominent examples include:

- Report Generation: NLG systems can automatically generate financial reports, market analysis reports, and scientific reports from structured data.

- Content Creation: NLG can create articles, blog posts, social media updates, and marketing copy, freeing up human writers for more creative tasks.

- Chatbots and Virtual Assistants: NLG enables chatbots and virtual assistants to provide personalized and informative responses to user queries.

- Product Descriptions: E-commerce businesses use NLG to generate unique and compelling product descriptions, improving SEO and sales.

- Email Marketing: NLG can personalize email campaigns, tailoring messages to individual customer preferences and behaviors.

- Data Visualization: NLG complements data visualization by providing textual summaries and explanations of charts and graphs.

Techniques in Natural Language Generation (NLG)

NLG employs various techniques to generate natural-sounding text. These techniques can be broadly categorized into the following approaches:

- Rule-Based NLG: This approach fills predefined rules and templates with values from the input data. It is predictable and easy to audit, which suits simple and repetitive tasks, but it cannot produce wording that the templates do not anticipate.

- Statistical NLG: This approach learns word-sequence probabilities from large text corpora, for example with n-gram language models, and generates text by sampling from them. It is more flexible than rule-based NLG but requires significant training data.

- Neural NLG: This approach uses neural networks, such as recurrent neural networks (RNNs) and transformers, to generate text. Neural NLG models can generate highly coherent and fluent text but are computationally intensive to train and run.
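The first two approaches are small enough to sketch in plain Python. The example below is illustrative only: `render_report`, the record fields, and the three-sentence corpus are hypothetical names and data invented for this sketch. It shows a template filled from structured data (rule-based) next to a bigram model that samples each next word from the words observed after the current one (statistical).

```python
import random
from collections import defaultdict


def render_report(record):
    """Rule-based NLG: fill a fixed template from a structured record."""
    # The only "rule" here picks a verb from the sign of the change.
    direction = "rose" if record["change_pct"] >= 0 else "fell"
    return (
        f"{record['company']} revenue {direction} "
        f"{abs(record['change_pct']):.1f}% to ${record['revenue_musd']}M "
        f"in {record['quarter']}."
    )


def train_bigrams(corpus):
    """Statistical NLG: record which words follow each word in the corpus."""
    followers = defaultdict(list)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            followers[prev].append(nxt)  # duplicates keep the observed counts
    return followers


def generate(followers, rng, max_words=20):
    """Sample one word at a time, in proportion to observed bigram counts."""
    word, output = "<s>", []
    for _ in range(max_words):
        word = rng.choice(followers[word])
        if word == "</s>":  # end-of-sentence marker reached
            break
        output.append(word)
    return " ".join(output)


# Hypothetical structured input for the template.
record = {"company": "Acme", "quarter": "Q2", "revenue_musd": 120, "change_pct": -3.4}
print(render_report(record))

# Toy corpus; a real statistical model would need far more text.
corpus = [
    "sales rose in the north",
    "sales fell in the south",
    "profits rose in the south",
]
model = train_bigrams(corpus)
print(generate(model, random.Random(0)))
```

The template always produces the same sentence shape, while the bigram model can recombine fragments into sentences that never appeared in the corpus, such as "profits rose in the north". That recombination is where both its flexibility and its occasional incoherence come from.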

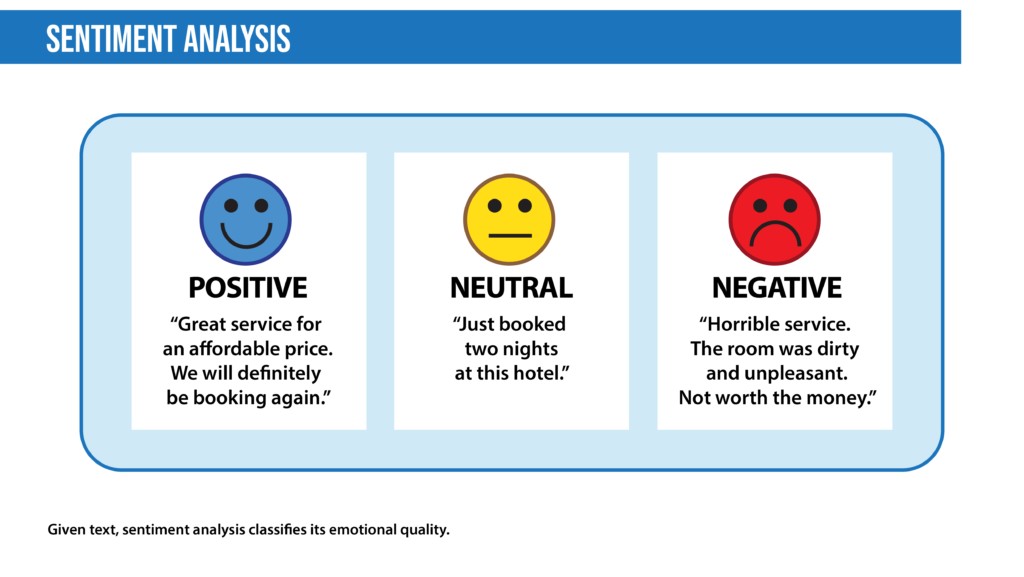

Deep Learning Models for Natural Language Generation

Deep learning models have revolutionized NLG, enabling the generation of high-quality text that closely resembles human writing. Some of the most popular deep learning models for NLG include:

- Recurrent Neural Networks (RNNs): RNNs are well-suited for processing sequential data like text. They have been used for various NLG tasks, such as machine translation and text summarization.

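- Long Short-Term Memory (LSTM) Networks: LSTMs are a type of RNN that uses gated memory cells to retain information over many time steps, which helps them handle long-range dependencies that plain RNNs tend to lose. They are effective for generating coherent and contextually relevant text.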

- Transformers: Transformers have become the dominant architecture for NLG due to their ability to process text in parallel and capture long-range dependencies efficiently. Models like GPT-3 and LaMDA are based on the transformer architecture.

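- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, that compete against each other. The generator tries to generate realistic text, while the discriminator tries to distinguish between generated and real text. Because text is made of discrete tokens, GANs are harder to train for text than for images and are used far less often for NLG than transformers.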
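To make the transformer entry above more concrete, here is a minimal sketch of scaled dot-product attention, the operation at the core of the architecture, written in plain Python. It is a simplification under stated assumptions: a single head, no learned projection matrices, no causal mask, and hand-picked two-dimensional token vectors. The function and variable names are chosen for this sketch and do not come from any library.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy self-attention: tokens 0 and 2 share a vector, token 1 differs.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each query is scored against every key independently, so all positions can be computed in parallel, and token 0 attends to token 2 exactly as strongly as to itself regardless of the distance between them. An RNN, by contrast, would have to carry that information through every intermediate step.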

Key Considerations in Natural Language Generation

Several factors influence the quality and effectiveness of NLG systems. These include:

- Data Quality: The quality and quantity of training data significantly impact the performance of NLG models.

- Coherence and Fluency: NLG systems should generate text that is both coherent (logically connected) and fluent (grammatically correct and natural-sounding).

- Contextual Awareness: NLG models should be aware of the context in which they are generating text to produce relevant and appropriate content.

- Bias and Fairness: NLG systems can perpetuate biases present in the training data. It is crucial to address bias and ensure fairness in NLG applications.

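- Evaluation Metrics: Appropriate evaluation metrics are needed to assess the quality of generated text. Common automatic metrics include BLEU, ROUGE, and METEOR, which score a generated text by its overlap with one or more human-written reference texts. Because overlap does not guarantee quality, they are often complemented by human evaluation.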
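The overlap idea behind these metrics can be sketched briefly. The code below is a simplified illustration, not a full implementation of either metric: it computes the clipped n-gram precision that BLEU builds on and a ROUGE-N style recall, using whitespace tokenization and a single reference. Full BLEU additionally combines several n-gram orders and applies a brevity penalty. The function names are chosen for this sketch.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, reference, n=1):
    """BLEU-style modified precision: share of candidate n-grams found in
    the reference, counting each n-gram at most as often as the reference does."""
    cand = Counter(ngrams(candidate.split(), n))
    ref = Counter(ngrams(reference.split(), n))
    total = sum(cand.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    return overlap / total


def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N style recall: share of reference n-grams covered by the candidate."""
    cand = Counter(ngrams(candidate.split(), n))
    ref = Counter(ngrams(reference.split(), n))
    total = sum(ref.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total


reference = "the cat is on the mat"
candidate = "the the the cat"
print(clipped_precision(candidate, reference, n=1))  # 0.75
print(rouge_n_recall(candidate, reference, n=1))     # 0.5
```

Clipping is what stops the repeated "the" from inflating the score: the reference contains "the" only twice, so only two of the three occurrences count. Precision rewards saying nothing wrong and recall rewards covering the reference, so it helps to read the two together.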

The Future of Natural Language Generation

NLG is a rapidly evolving field with significant potential for future advancements. Some of the key trends and future directions in NLG include:

- Improved Coherence and Creativity: Future NLG models are expected to generate more coherent, creative, and human-like text.

- Multimodal NLG: NLG systems will increasingly generate text from multiple sources of information, such as images, videos, and sensor data.

- Personalized NLG: NLG will become increasingly personalized, tailoring content to individual user preferences and needs.

- Explainable NLG: There will be a greater focus on making NLG systems more transparent and explainable, allowing users to understand why a particular text was generated.

- Ethical Considerations: Ethical considerations, such as bias, fairness, and misinformation, will play an increasingly important role in the development and deployment of NLG systems.

Getting Started with Natural Language Generation

If you’re interested in getting started with NLG, there are numerous resources available to help you learn and develop your skills.

- Online Courses: Platforms like Coursera, Udacity, and DeepLearning.AI offer courses on NLP and NLG.

- Books and Tutorials: Numerous books and online tutorials cover the fundamentals of NLG and deep learning for text generation.

- Open-Source Libraries: Libraries like TensorFlow, PyTorch, and Hugging Face Transformers provide tools and pretrained models for building NLG systems.

- Datasets: Publicly available datasets, such as the Penn Treebank and the WikiText corpus, can be used to train and evaluate NLG models.

Conclusion

Natural Language Generation (NLG) is a powerful technology that enables machines to create human-quality text. With applications ranging from automated content creation to personalized customer communication, NLG is transforming various industries. As the field continues to advance, NLG systems will become even more sophisticated, generating text that is more coherent, creative, and contextually aware. By understanding the techniques, challenges, and future directions of NLG, you can unlock its potential to automate, personalize, and enhance communication in a wide range of applications.