Deep learning demands significant computational power. Choosing the right hardware, from the GPU down to the CPU, RAM, and power supply, is crucial for building an efficient and cost-effective deep learning system. This guide helps you navigate the key hardware components, focusing on how to avoid common and costly mistakes.

Over the years, I’ve assembled seven deep learning workstations. Despite careful planning, I’ve made my share of hardware selection errors. Here, I share my experiences to help you avoid the same pitfalls.

Contents

GPU

RAM

- Needed RAM Clock Rate

- RAM Size

CPU

- CPU and PCI-Express

- PCIe Lanes and Multi-GPU Parallelism

- Needed CPU Cores

- Needed CPU Clock Rate (Frequency)

Hard drive/SSD

Power supply unit (PSU)

CPU and GPU Cooling

- Air Cooling GPUs

- Water Cooling GPUs For Multiple GPUs

- A Big Case for Cooling?

- Conclusion Cooling

Motherboard

Computer Case

Monitors

Some words on building a PC

Conclusion / TL;DR

GPU

The GPU is the core of deep learning. If you’re building or upgrading a system, including a GPU is essential. The acceleration in processing speed is too significant to ignore. Your GPU choice is the most important decision. The main mistakes include:

- Bad cost/performance

- Insufficient memory

- Inadequate cooling

For cost-effective performance, consider options like the RTX 2070 or RTX 2080 Ti. These cards support 16-bit models. Alternatives from eBay, such as GTX 1070, GTX 1080, GTX 1070 Ti, and GTX 1080 Ti, are also good choices for 32-bit models.

Memory is a crucial factor. RTX cards, with 16-bit support, can handle larger models with the same memory compared to GTX cards. Memory requirements generally break down as follows:

- Cutting-edge research: >= 11 GB

- Exploring new architectures: >= 8 GB

- General research: 8 GB

- Kaggle competitions: 4 – 8 GB

- Startups: 8 GB (application-specific)

- Companies: 8 GB for prototyping, >= 11 GB for training
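A rough way to see why 16-bit support stretches the same card further: a 16-bit value takes half the bytes of a 32-bit value, so twice as many fit. The sketch below counts raw values only and ignores activations, gradients, and optimizer state; the function name `max_values` is made up for illustration, and 1 GB is taken as 10^9 bytes.

```python
def max_values(memory_gb: float, bits_per_value: int) -> int:
    """Upper bound on how many values of the given width fit in memory_gb."""
    bytes_per_value = bits_per_value // 8
    total_bytes = int(memory_gb * 10**9)  # assumption: 1 GB = 10**9 bytes
    return total_bytes // bytes_per_value


fp32_values = max_values(8, 32)  # 8 GB card, 32-bit values
fp16_values = max_values(8, 16)  # same card, 16-bit values

print(f"32-bit: {fp32_values:,} values")
print(f"16-bit: {fp16_values:,} values")
print(f"ratio:  {fp16_values / fp32_values:.1f}x")
```

In practice the gain is usually smaller than 2x, since not everything in a training run is stored in 16 bits.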

Cooling is vital, especially with multiple RTX cards. For adjacent PCIe slots, use GPUs with a blower-style fan to avoid temperature issues, which can reduce performance by up to 30% and shorten lifespan.

RAM

Common RAM mistakes include paying for excessively high clock rates and buying too little RAM for smooth prototyping.

Needed RAM Clock Rate

High RAM clock rates are often marketing ploys, providing minimal performance gains.

RAM speed is largely irrelevant for CPU RAM to GPU RAM transfers. If you use pinned memory, mini-batches transfer to the GPU without CPU involvement. Otherwise, faster RAM offers only a 0-3% performance increase. Invest your budget elsewhere.

RAM Size

RAM size does not directly impact deep learning performance, but it does affect your workflow. Buy enough RAM to work comfortably with your GPU, which means at least matching the memory of your largest GPU. For instance, a Titan RTX with 24 GB of memory calls for at least 24 GB of RAM. Adding more GPUs doesn’t necessarily mean needing more RAM.

If you process large datasets, matching GPU memory may still fall short. In this case, buy more RAM as needed.

From a psychological perspective, sufficient RAM conserves mental energy. Instead of struggling with RAM bottlenecks, you can focus on more complex programming challenges. Additional RAM is especially helpful for feature engineering in Kaggle competitions. Therefore, investing in more, inexpensive RAM upfront can save time and boost productivity.

CPU

A primary CPU mistake is overemphasizing PCIe lanes. Focus on CPU and motherboard compatibility with your desired number of GPUs. Another mistake is buying an overly powerful CPU.

CPU and PCI-Express

People often overvalue PCIe lanes. They have minimal impact on deep learning performance. A single GPU primarily uses PCIe lanes to transfer data from CPU RAM to GPU RAM. However, even with limited lanes, transfer speeds are fast.

Consider an ImageNet mini-batch of 32 images (32x225x225x3) using 32-bit:

- 16 lanes: 1.1 milliseconds

- 8 lanes: 2.3 milliseconds

- 4 lanes: 4.5 milliseconds
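The transfer times above can be approximated with a back-of-the-envelope calculation. The sketch below assumes roughly 1 GiB/s of usable bandwidth per lane. That per-lane figure is my assumption (it is in the range of PCIe 3.0 and happens to reproduce the numbers in the list), not something the text states. The function name is hypothetical.

```python
def transfer_ms(batch, height, width, channels, bytes_per_value,
                lanes, gib_per_s_per_lane=1.0):
    """Theoretical CPU->GPU transfer time in milliseconds.

    gib_per_s_per_lane is an assumed per-lane bandwidth, not a measured one.
    """
    size_bytes = batch * height * width * channels * bytes_per_value
    bandwidth_bytes_per_s = lanes * gib_per_s_per_lane * 2**30
    return size_bytes / bandwidth_bytes_per_s * 1000


# Mini-batch of 32 images, 225x225x3, 32-bit (4-byte) values.
for lanes in (16, 8, 4):
    ms = transfer_ms(32, 225, 225, 3, 4, lanes)
    print(f"{lanes:>2} lanes: {ms:.1f} ms")
```

Halving the lane count doubles the transfer time, but all three values stay in the low single-digit milliseconds.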

In practice, PCIe can be twice as slow, but latency remains negligible. For an ImageNet mini-batch with a ResNet-152, timing looks like this:

- Forward and backward pass: 216 milliseconds (ms)

- 16 PCIe lanes CPU->GPU transfer: ~2 ms (1.1 ms theoretical)

- 8 PCIe lanes CPU->GPU transfer: ~5 ms (2.3 ms)

- 4 PCIe lanes CPU->GPU transfer: ~9 ms (4.5 ms)

Upgrading from 4 to 16 PCIe lanes offers only about a 3.2% performance increase. With PyTorch’s data loader and pinned memory, the performance gain is 0%. Don’t overspend on PCIe lanes for a single GPU setup.
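The 3.2% figure follows directly from the timings listed above, assuming the transfer is not overlapped with computation (the worst case). A minimal sketch of that arithmetic, with hypothetical names:

```python
COMPUTE_MS = 216                      # forward and backward pass, from the list above
TRANSFER_MS = {16: 2, 8: 5, 4: 9}     # practical CPU->GPU transfer times per lane count


def speedup_percent(slow_lanes: int, fast_lanes: int) -> float:
    """Percent gain per mini-batch from moving to the faster lane count.

    Assumes transfer and compute run one after the other (no overlap).
    """
    slow_total = COMPUTE_MS + TRANSFER_MS[slow_lanes]
    fast_total = COMPUTE_MS + TRANSFER_MS[fast_lanes]
    return (slow_total / fast_total - 1) * 100


print(f"4 -> 16 lanes: {speedup_percent(4, 16):.1f}% faster")
print(f"8 -> 16 lanes: {speedup_percent(8, 16):.1f}% faster")
```

When the transfer is hidden behind computation, as with pinned memory and an asynchronous data loader, the transfer term drops out and the gain goes to zero.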

When choosing a CPU and motherboard, ensure they support your target number of GPUs, but don’t prioritize PCIe lanes.

PCIe Lanes and Multi-GPU Parallelism

Are PCIe lanes important for multi-GPU data parallelism? Research indicates that with numerous GPUs (e.g., 96), PCIe lanes matter significantly. However, for four or fewer GPUs, the impact is minimal. With 2-3 GPUs, PCIe lanes are not a concern. For 4 GPUs, ensure support for 8 PCIe lanes per GPU (32 total). Since most systems don’t exceed 4 GPUs, additional spending on PCIe lanes isn’t worthwhile.

Needed CPU Cores

To select the right CPU, understand its role in deep learning. The CPU mainly initiates GPU function calls and executes CPU functions.

Data preprocessing is the CPU’s most critical task. There are two main strategies:

- Preprocessing during training: Load mini-batch -> Preprocess -> Train

- Preprocessing before training: Preprocess all data -> Loop: Load preprocessed mini-batch -> Train

The first strategy benefits from a CPU with many cores. The second requires a less powerful CPU. For the first strategy, aim for at least 4 threads per GPU (usually two cores). Each additional core/GPU may provide a 0-5% performance boost.

For the second strategy, a minimum of 2 threads per GPU (one core) is sufficient. More cores provide little performance gain.
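The two rules of thumb above can be written down as a small helper. This is only a sketch of the guideline, not a benchmark; the function name is made up, and it assumes two hardware threads per core, as the text does.

```python
def recommended_cpu(num_gpus: int, preprocess_during_training: bool):
    """Return (threads, cores) suggested by the rule of thumb above.

    Assumes 2 hardware threads per core.
    """
    threads_per_gpu = 4 if preprocess_during_training else 2
    threads = num_gpus * threads_per_gpu
    cores = threads // 2
    return threads, cores


for gpus in (1, 2, 4):
    on_the_fly = recommended_cpu(gpus, preprocess_during_training=True)
    ahead_of_time = recommended_cpu(gpus, preprocess_during_training=False)
    print(f"{gpus} GPU(s): during training {on_the_fly}, before training {ahead_of_time}")
```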

Needed CPU Clock Rate (Frequency)

High clock rates are often equated with faster CPUs. While generally true for processors with the same architecture, it’s not always the best performance measure.

The CPU handles limited computations in deep learning, such as variable adjustments, Boolean expressions, and function calls.

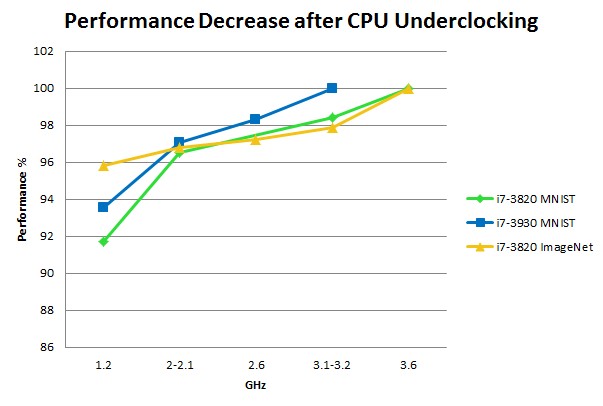

While CPU usage may be at 100% during deep learning, underclocking experiments reveal its impact:

CPU Underclocking Performance


Hard drive/SSD

Hard drives aren’t typically a bottleneck in deep learning. However, improper usage can cause issues. If you read data from disk only when it is needed (a blocking wait), a 100 MB/s hard drive adds about 185 milliseconds per ImageNet mini-batch of size 32. If you instead fetch the data asynchronously before it is used (e.g., with torchvision loaders), the next mini-batch still takes 185 milliseconds to load, but that happens while the current one is being computed. Since the compute time is approximately 200 milliseconds, the load finishes in time and there is no performance penalty.
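A small calculation makes the difference between blocking and asynchronous loading concrete. The sketch assumes the same mini-batch as in the PCIe section (32 images of 225x225x3 in 32-bit) and treats 100 MB/s as 100 MiB/s, which is my assumption and reproduces the 185 ms figure. It also idealizes asynchronous loading as perfectly overlapped with compute. All names are hypothetical.

```python
def load_ms(batch, height, width, channels, bytes_per_value, disk_mib_per_s):
    """Time to read one mini-batch from disk, in milliseconds."""
    size_mib = batch * height * width * channels * bytes_per_value / 2**20
    return size_mib / disk_mib_per_s * 1000


def step_ms(load, compute, asynchronous):
    """Time per training step.

    Blocking: load, then compute. Asynchronous: the next batch loads
    while the current one is computed, so the slower of the two dominates.
    """
    return max(load, compute) if asynchronous else load + compute


load = load_ms(32, 225, 225, 3, 4, disk_mib_per_s=100)
compute = 200  # approximate compute time per mini-batch from the text

print(f"load time:       {load:.0f} ms")
print(f"blocking step:   {step_ms(load, compute, asynchronous=False):.0f} ms")
print(f"async step:      {step_ms(load, compute, asynchronous=True):.0f} ms")
```

As long as the load time stays below the compute time, a faster disk does not shorten the training step.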

For improved comfort and productivity, use an SSD. Programs start and respond faster, and preprocessing with large files is quicker. An NVMe SSD offers an even smoother experience.

The ideal setup includes a large, slower hard drive for datasets and an SSD for productivity.

Power supply unit (PSU)

Choose a PSU that supports all future GPUs. GPUs tend to become more energy-efficient, making a good PSU a lasting investment.

Calculate the required wattage by adding the CPU and GPU watts, plus roughly 10% for other components and as a buffer for power spikes. For example, with 4 GPUs at 250 watts TDP each and a CPU at 150 watts TDP, you need a minimum of 4×250 + 150 + 100 = 1250 watts, where the extra 100 watts is that allowance. Adding another 10% as a safety margin yields 1375 watts, so a 1400-watt PSU is appropriate.
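The worked example can be turned into a small calculator for other configurations. This is a sketch of the rule of thumb only; the function name and the default values for the allowance and margin are taken from the example above, not from any PSU vendor guidance.

```python
def psu_watts(num_gpus, gpu_tdp, cpu_tdp, other_watts=100, safety_margin=0.10):
    """Return (minimum_watts, recommended_watts) for a build.

    other_watts: allowance for other components and power spikes.
    safety_margin: extra fraction added on top of the minimum.
    """
    minimum = num_gpus * gpu_tdp + cpu_tdp + other_watts
    recommended = round(minimum * (1 + safety_margin))
    return minimum, recommended


minimum, recommended = psu_watts(num_gpus=4, gpu_tdp=250, cpu_tdp=150)
print(f"minimum:     {minimum} W")
print(f"recommended: {recommended} W")
```

Round the recommended value up to the next PSU size that is actually sold, as the example does with 1400 watts.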

Ensure the PSU has enough PCIe 8-pin or 6-pin connectors for all GPUs.

Buy a PSU with a high power efficiency rating, especially for multiple GPUs running for extended periods.

Running a 4 GPU system at full power (1000-1500 watts) for two weeks can consume 300-500 kWh. At 20 cents per kWh, this costs 60-100€. An 80% efficient power supply increases these costs by an additional 18-26€. While the impact is less for a single GPU, an efficient power supply is a sound investment.
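The energy and cost estimate can be reproduced approximately as follows. The sketch assumes the quoted wattage is what the components draw and that a PSU with efficiency e pulls draw / e from the wall. The article does not spell out its exact rounding, so the results land close to the quoted ranges rather than exactly on them. All names are hypothetical.

```python
def energy_kwh(watts, hours):
    """Energy used by a constant load, in kilowatt-hours."""
    return watts * hours / 1000


def extra_cost_from_inefficiency(base_cost, efficiency):
    """Additional cost when the PSU draws base / efficiency from the wall."""
    return base_cost / efficiency - base_cost


HOURS = 14 * 24          # two weeks
PRICE_PER_KWH = 0.20     # euros, as in the text

for watts in (1000, 1500):
    kwh = energy_kwh(watts, HOURS)
    cost = kwh * PRICE_PER_KWH
    extra = extra_cost_from_inefficiency(cost, efficiency=0.80)
    print(f"{watts} W: {kwh:.0f} kWh, {cost:.0f} EUR, +{extra:.0f} EUR at 80% efficiency")
```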

Running GPUs extensively increases your carbon footprint. Consider carbon-neutral options.

CPU and GPU Cooling

Cooling is vital and can become a significant bottleneck, reducing performance more than poor hardware choices. Standard heat sinks or all-in-one (AIO) water cooling solutions are typically adequate for CPUs. However, GPUs require special consideration.

Air Cooling GPUs

Air cooling is reliable for a single GPU or multiple GPUs with spacing. The biggest mistake occurs when trying to cool 3-4 tightly packed GPUs.

Modern GPUs increase speed and power consumption until reaching a temperature barrier (often 80°C), then reduce speed to prevent overheating. The default fan schedules are poorly suited to deep learning programs, so the GPU reaches this threshold quickly and performance drops (0-10% for a single GPU, 10-25% for multiple GPUs).

NVIDIA GPUs are optimized for Windows, where the fan schedule is easy to adjust. On Linux it is not, and that is a problem because most deep learning libraries are designed for Linux.

Linux offers the “coolbits” option in the Xorg server configuration, which works well for a single GPU. However, managing multiple headless GPUs (without a monitor) requires emulating a monitor, which is complex and unreliable.

For 3-4 GPU setups with air cooling, fan design is critical. Blower-style fans expel hot air out the back, drawing in cooler air. Non-blower fans recirculate hot air, causing overheating and throttling. Avoid non-blower fans in 3-4 GPU setups.

Water Cooling GPUs For Multiple GPUs

Water cooling is a more expensive alternative. While not recommended for a single GPU or spaced GPUs, it ensures optimal cooling for 4 GPU setups, which air cooling struggles to achieve. Water cooling is also quieter, ideal for shared workspaces. Each GPU water cooling setup costs around $100 plus upfront costs (approximately $50). Assembly requires extra effort, but detailed guides are available. Maintenance is generally straightforward.

A Big Case for Cooling?

Large towers with additional GPU area fans offer minimal benefit (2-5°C decrease). The direct cooling solution on the GPU is paramount. Don’t overspend on cases for GPU cooling. Focus on GPU fit.

Conclusion Cooling

For a single GPU, air cooling is best. For multiple GPUs, use blower-style air cooling (with a small performance penalty) or invest in water cooling for optimal performance. Air cooling is simpler, while water cooling demands more setup. For water cooling, look for all-in-one (AIO) solutions for GPUs.

Motherboard

Your motherboard should have enough PCIe slots to support your desired number of GPUs (typically up to four). Remember that most GPUs are two PCIe slots wide. Select a motherboard with sufficient space between slots for multiple GPUs. Verify that the motherboard supports your intended GPU configuration by checking the PCIe section of its listing on Newegg.

Computer Case

When selecting a case, ensure it accommodates full-length GPUs. Most cases do, but be cautious with smaller cases. Check dimensions and specifications, or search for images of the case with GPUs installed.

For custom water cooling, ensure the case has enough space for radiators, particularly for GPUs. The radiator for each GPU requires space, so confirm that the setup fits within the case.

Monitors

The money spent on multiple monitors is a great investment. Productivity significantly increases when using multiple monitors.

Optimal monitor layout for deep learning workflow: Left – research, middle – code, right – output and system monitoring.

Some words on building a PC

Building a computer can seem daunting, but it’s straightforward. Incompatible components simply won’t fit together. The motherboard manual provides assembly instructions, and countless guides and videos are available.

Once you have built one computer, that knowledge carries over to every later build.

Conclusion / TL;DR

GPU: RTX 2070 or RTX 2080 Ti. GTX 1070, GTX 1080, GTX 1070 Ti, and GTX 1080 Ti (eBay) are also good.

CPU: 1-2 cores per GPU (depending on data preprocessing), > 2GHz. The CPU should support the number of GPUs you want to use. PCIe lanes are not a major factor.

RAM:

- Clock rates don’t matter – buy the cheapest.

- Match CPU RAM to the RAM of your largest GPU.

- Buy more RAM only as needed.

- More RAM is useful for frequent work with large datasets.

Hard drive/SSD:

- Hard drive for data (>= 3TB)

- SSD for comfort and preprocessing smaller datasets.

PSU:

- Add up the watts of GPUs + CPU, then multiply by 110% for the required wattage.

- Get a high-efficiency rating if using multiple GPUs.

- Ensure the PSU has enough PCIe connectors (6+8 pins).

Cooling:

- CPU: Use a standard CPU cooler or all-in-one (AIO) water cooling solution.

- GPU: Use air cooling, and get GPUs with “blower-style” fans if buying multiple GPUs. Set the coolbits flag in your Xorg config to control fan speeds.

Motherboard:

- Get as many PCIe slots as needed for your (future) GPUs (one GPU takes two slots; max 4 GPUs per system).

Monitors:

- An additional monitor can enhance productivity more than an additional GPU.