Quantization, a cornerstone technique for optimizing Large Language Models (LLMs), significantly reduces their size and computational demands, which in turn enables deployment on consumer hardware and improves inference speed. CONDUCT.EDU.VN provides accessible educational materials that illuminate complex topics like quantization. This visual guide offers a comprehensive overview of quantization, detailing its methodologies, use cases, and underlying principles, helping you grasp this crucial concept in machine learning and high-performance computing.

1. Understanding the Challenge with Large Language Models (LLMs)

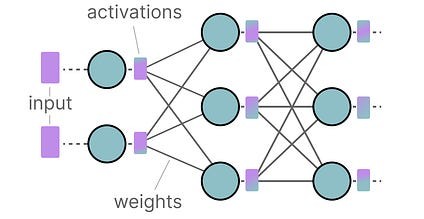

LLMs possess an immense number of parameters, often numbering in the billions, which primarily consist of weights. Storing these parameters demands significant memory.

1.1. Representing Numerical Values in Computing

In computer science, numerical values are frequently represented as floating-point numbers, which include a sign, exponent, and fraction (or mantissa). The IEEE-754 standard defines how bits are used to represent these components.

1.2. Impact of Bit Precision on Memory Usage

The precision of a numerical representation is determined by the number of bits used. The more bits, the greater the precision, allowing for a wider range of values to be represented. This affects both the dynamic range (the interval of representable numbers) and the precision (the distance between two neighboring values).

1.3. The Need for Efficient Representation

Given that a 70 billion parameter model in full-precision (32-bit floating point) requires 280GB of memory, reducing the number of bits to represent parameters becomes a critical task. However, reducing precision can impact the model’s accuracy, necessitating quantization to minimize memory usage while preserving performance.

2. Introduction to Quantization Techniques

Quantization involves reducing the precision of a model’s parameters from higher bit-widths, such as 32-bit floating point, to lower bit-widths, like 8-bit integers. This often results in a loss of precision or granularity.

2.1. Common Data Types in Quantization

-

FP16 (Half Precision): Reducing from 32-bit to 16-bit floating point reduces memory usage but also reduces the range of representable values.

-

BF16 (BFloat16): This format is a truncated version of FP32 that maintains a similar range of values, making it suitable for deep learning applications.

-

INT8 (8-bit Integer): This is an integer-based representation that significantly reduces the number of bits compared to FP32.

2.2. Symmetric vs. Asymmetric Quantization

-

Symmetric Quantization: This method maps the range of original floating-point values to a symmetric range around zero in the quantized space. A common technique is absolute maximum (absmax) quantization, which uses the highest absolute value to perform the linear mapping.

Formula for Symmetric Quantization:

-

Calculate the scaling factor (s):

s = (2^(b-1) – 1) / α

-

Quantize the input (x):

q = round(x / s)

-

Dequantize the value:

x ≈ q * s

-

-

Asymmetric Quantization: This method does not maintain symmetry around zero and maps the minimum and maximum values from the float range to the minimum and maximum values of the quantized range. Zero-point quantization is a common method used in this approach.

Formula for Asymmetric Quantization:

-

Calculate the scaling factor (s):

s = (max_int – min_int) / (α – β)

-

Calculate the zero-point (z):

z = round(min_int – β / s)

-

Quantize the input (x):

q = round(x / s + z)

-

Dequantize the value:

x ≈ (q – z) * s

-

2.3. Range Mapping and Clipping

To mitigate the impact of outliers, clipping can be employed, which involves setting a different dynamic range of the original values so that outliers get the same value. This reduces the quantization error for non-outliers.

2.4. Calibration Techniques

Calibration aims to find a range that includes as many values as possible while minimizing the quantization error. Different calibration strategies are used for weights and activations.

-

Weights: Static values that can be calibrated using percentiles, mean squared error (MSE) optimization, or minimizing entropy (KL-divergence).

-

Activations: Dynamic values that require different strategies due to their variability with each input.

3. Post-Training Quantization (PTQ)

PTQ involves quantizing a model’s parameters after training. It can be divided into dynamic and static quantization for activations.

3.1. Dynamic Quantization

In dynamic quantization, the zero-point and scale factor are calculated for each layer during inference based on the distribution of activations.

3.2. Static Quantization

Static quantization uses a calibration dataset to determine the zero-point and scale factor before inference, which remains fixed during inference.

3.3. Advanced Quantization Techniques: GPTQ and GGUF

-

GPTQ (Generative Pre-trained Transformer Quantization): This technique uses asymmetric quantization layer by layer and is particularly noted for quantizing models to 4 bits.

GPTQ leverages the inverse-Hessian to identify the importance of each weight and redistributes the quantization error to maintain the network’s overall function.

-

GGUF (GPT-Generated Unified Format): This method allows offloading layers of the LLM to the CPU, enabling the use of both CPU and GPU when VRAM is insufficient.

GGUF splits the weights into super and sub-blocks, extracting scale factors from each to perform block-wise quantization, offering different quantization levels to balance performance and precision.

4. Quantization Aware Training (QAT)

QAT involves learning the quantization procedure during training. It tends to be more accurate than PTQ as it considers quantization during the training process.

4.1. Implementing Fake Quantization

During training, “fake” quants are introduced by quantizing the weights (e.g., to INT4) and then dequantizing them back to FP32. This enables the model to account for quantization during loss calculation and weight updates, seeking “wide” minima in the loss landscape to minimize quantization errors.

Quantizing and dequantizing weights to simulate the effects of quantization.

4.2. Benefits of Considering Quantization During Training

QAT helps the model to find weights that are more resilient to quantization, leading to lower loss in lower precision compared to PTQ.

5. Advances in Extreme Quantization: 1-bit LLMs (BitNet)

BitNet represents model weights using only 1 bit, utilizing either -1 or 1, by integrating quantization directly into the Transformer architecture.

5.1. BitLinear Layers

BitNet replaces linear layers with BitLinear layers, which use 1-bit weights and INT8 activations to reduce computational demands.

5.2. Quantization Process in BitNet

-

Weight Quantization: Weights are quantized to 1 bit using the signum function.

-

Activation Quantization: Activations are quantized from FP16 to INT8 using absmax quantization.

5.3. BitNet 1.58b: Ternary Weight Representation

BitNet 1.58b extends the concept by allowing weights to be -1, 0, or 1, offering ternary weight representation.

5.4. The Advantage of Zero

The inclusion of 0 allows for simplified matrix multiplication, which involves only addition and subtraction, improving computational efficiency and enabling feature filtering.

6. FAQ on Quantization

- What is quantization in the context of machine learning?

Quantization is a technique to reduce the precision of the model’s parameters, which in turn reduces the model size and computational cost without significantly impacting performance. - Why is quantization important for large language models (LLMs)?

LLMs are very large and computationally intensive. Quantization helps reduce their size, making them deployable on resource-constrained devices and speeding up inference. - What are the main types of quantization techniques?

The main types include post-training quantization (PTQ) and quantization-aware training (QAT). PTQ is performed after the model is trained, while QAT integrates quantization during the training process. - What are the differences between dynamic and static quantization?

Dynamic quantization calculates quantization parameters (scale and zero-point) on the fly during inference, whereas static quantization uses pre-calculated parameters based on a calibration dataset. - What is the role of calibration in quantization?

Calibration determines the optimal range for quantization to minimize the quantization error. It involves finding a range that includes as many values as possible while preserving accuracy. - How does GPTQ work, and what are its benefits?

GPTQ uses asymmetric quantization and works layer by layer. It redistributes quantization errors based on the importance of each weight, enabling accurate quantization to 4 bits. - What is GGUF, and how does it facilitate CPU offloading?

GGUF is a quantization method that allows offloading layers of a model to the CPU, which enables running large models on systems with limited GPU VRAM. - What is BitNet, and how does it represent model weights?

BitNet represents model weights using only 1 bit (-1 or 1), which drastically reduces model size. It uses quantized activations and incorporates the quantization process into the Transformer architecture. - What is the significance of BitNet 1.58b, and what is its key innovation?

BitNet 1.58b allows weights to be -1, 0, or 1, making it ternary. The inclusion of 0 simplifies matrix multiplication and enables feature filtering, improving computational efficiency. - Where can I find more information about quantization techniques?

CONDUCT.EDU.VN provides comprehensive educational materials and guides on quantization and related optimization techniques. Additionally, research papers and documentation from the GGML repository can be helpful. For further assistance, you can contact CONDUCT.EDU.VN at 100 Ethics Plaza, Guideline City, CA 90210, United States, or via Whatsapp at +1 (707) 555-1234, or visit the website CONDUCT.EDU.VN

7. Conclusion

This visual guide has provided a comprehensive overview of quantization techniques for optimizing large language models. From basic concepts like symmetric and asymmetric quantization to advanced methods like GPTQ, GGUF, and BitNet, quantization enables the deployment of LLMs on various hardware platforms while maintaining acceptable performance.

To further explore the intricacies of LLMs and related topics, visit the official website at CONDUCT.EDU.VN. For any questions or further assistance, feel free to contact us at 100 Ethics Plaza, Guideline City, CA 90210, United States, or via Whatsapp at +1 (707) 555-1234.

Interested in learning more about quantization and its practical applications? conduct.edu.vn offers detailed courses and resources to help you master these skills. Explore our website today and take the next step in your AI journey.